The Drobo hype

I saw the first Drobo presentation video on YouTube almost two years ago. Since then I have been longing to get one, but at 490,- € for an empty box without a single hard drive, the price was too high in my opinion.

As a regular podcast listener I heard everywhere about the sexiness of the Drobo: MacBreak Weekly mentions it in every episode, the guys at BitsUndSo are collecting them like crazy, and finally Tim Pritlove also got one. But then, on his podcast Mobile Macs, he mentioned some strange difficulties with his Drobo, which made me think a little more about the subject.

In my opinion the Drobo has some major drawbacks:

- it doesn't know about the data it hosts or the filesystem in use, so during a resilvering task it has to duplicate every part of a hard drive, including the parts that only contain noise

- one has to set an upper limit for the hosted filesystem, so the Drobo presents itself to the host machine as one physical drive of a fixed size

- you cannot use all the disk space if you use drives of different sizes

- it is limited to a maximum of 4 drives

- if your Drobo fails, you cannot access your data

We can do better!

So what would I want from my dream backup device?

- it should not have an upper storage limit

- it should be able to heal itself silently

- it should be network accessible (especially for Macs)

- the drives should also work when connected to other hardware

- it should be usable as a Time Machine target

- it HAS to be cheaper than a Drobo

It doesn't have to be beautiful or silent, since I want to put it in my storage room and forget about it.

Initial thoughts

In my opinion the most modern and future-proof filesystem at the moment is ZFS. Ever since listening to the very good (German-language) Chaos Radio Express episode CRE049 about ZFS by Tim Pritlove, I have wanted to use it. Unfortunately Apple is very slow with its ZFS plans: Leopard includes ZFS but can only read it, and the plans for Snow Leopard are very vague. So Mac OS X is not an option at the moment. Since I want it to be cheap, Mac hardware is not an option either.

FreeBSD seems to have a recent version of ZFS, but I gave OpenSolaris a try: since ZFS is developed by Sun, I think Solaris is the first OS where new ZFS features will appear. Bleeding edge is always best 😉 so I looked further into this setup.

Test driving OpenSolaris

I wanted to investigate further, so before using real hardware I test-drove it with virtualization software. After downloading the current ISO of OpenSolaris 2008.11, I tried to install it on my Mac with VMware Fusion, but at that time I didn't know that Solaris and OpenSolaris are the same, so I had difficulties setting up the VM properly.

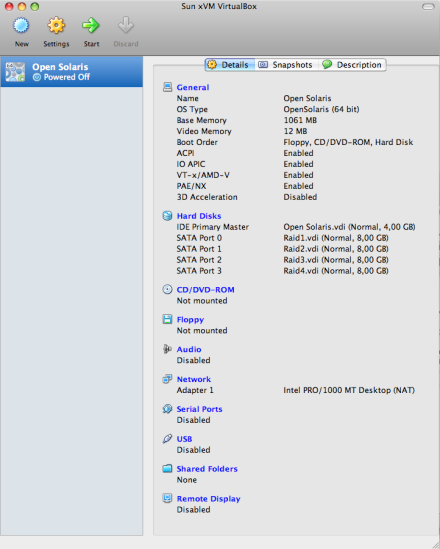

So I tried out VirtualBox, hoping that, since it is now owned by Sun, it would work like a charm virtualizing Solaris. I set up a new VM with one boot disk and four RAID disks.

I switched on all the fancy CPU extensions. There is only one ISO image for both x86 and x64, so I turned on x64 and it automatically used the 64-bit kernel. The hardware recommendations for ZFS say that it works best on a 64-bit system with at least 1 GB of RAM.

Installing OpenSolaris from the live system worked very well. I was very surprised by the polished look, thanks to Gnome (although I am a KDE fanboy). ZFS is now used by default as the boot filesystem on Solaris, so I didn't have to do anything to activate it.

When the installation was complete and the system was up and running, I made a little tweak to get SSH access to the VM. Since the VM used NAT, I set up a port forward from port 2222 on my Mac to port 22 in the guest. I edited the XML file of my virtual machine (~/Library/VirtualBox/Machines/Open Solaris/Open Solaris.xml in my case) and inserted the following lines into the ExtraData section:

<ExtraDataItem name="VBoxInternal/Devices/e1000/0/LUN#0/Config/ssh/HostPort" value="2222"/>

<ExtraDataItem name="VBoxInternal/Devices/e1000/0/LUN#0/Config/ssh/GuestPort" value="22"/>

<ExtraDataItem name="VBoxInternal/Devices/e1000/0/LUN#0/Config/ssh/Protocol" value="TCP"/>

After starting the VM, I could connect to it with “ssh -l <user> -p 2222 localhost”.

I worked with Linux systems for several years and after that with Mac OS X. I had no problems adapting to the Solaris world, since they took many products from the open source world, like bash, and integrated them. So the learning curve of this system seems very flat.

To get some info about the system I entered the following commands:

Get info about the kernel mode

$ isainfo -kv

64-bit amd64 kernel modules

Listing all system devices

$ prtconf -pv

System Configuration: Sun Microsystems i86pc

Memory size: 1061 Megabytes

System Peripherals (PROM Nodes):

Node 0x000001

bios-boot-device: '80'

...

Show the system log

$ cat /var/adm/messages

Setting up the storage pool

Since I want one huge storage pool that can grow over time, I used RAIDZ.

The following commands were entered as root.

First, to list all connected storage devices:

# format

Searching for disks...done

AVAILABLE DISK SELECTIONS:

0. c3d0 <DEFAULT cyl 2044 alt 2 hd 128 sec 32> /pci@0,0/pci-ide@1,1/ide@0/cmdk@0,0

1. c5t0d0 <ATA-VBOX HARDDISK-1.0-8.00GB> /pci@0,0/pci8086,2829@d/disk@0,0

2. c5t1d0 <ATA-VBOX HARDDISK-1.0-8.00GB> /pci@0,0/pci8086,2829@d/disk@1,0

3. c5t2d0 <ATA-VBOX HARDDISK-1.0-8.00GB> /pci@0,0/pci8086,2829@d/disk@2,0

4. c5t3d0 <ATA-VBOX HARDDISK-1.0-8.00GB> /pci@0,0/pci8086,2829@d/disk@3,0

Specify disk (enter its number): ^C

The ids after the selection numbers (c5t0d0 to c5t3d0 for the four RAID disks) are the device ids we need to set up the storage pool. To create a pool named “tank”, I entered:

zpool create -f tank raidz c5t0d0 c5t1d0 c5t2d0 c5t3d0
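When rebuilding the pool repeatedly while experimenting in the VM, a small helper can pull the device ids out of the `format` listing instead of copying them by hand. This is a minimal sketch under two assumptions: the listing looks like the one above, and every disk except the boot disk (c3d0 here) belongs in the pool. The function names are made up, and the code only builds the command string; it does not run it.

```python
import re

# Device ids look like c5t0d0 (controller, target, disk) or c3d0 (no target).
DEVICE_RE = re.compile(r"^\s*\d+\.\s+(c\d+(?:t\d+)?d\d+)\s", re.MULTILINE)


def data_disks(format_output: str, boot_disk: str) -> list[str]:
    """Device ids listed by `format`, excluding the boot disk."""
    return [dev for dev in DEVICE_RE.findall(format_output) if dev != boot_disk]


def zpool_create_command(pool: str, disks: list[str]) -> str:
    """Build (but do not run) a single-parity RAIDZ create command."""
    return " ".join(["zpool", "create", "-f", pool, "raidz", *disks])


listing = """AVAILABLE DISK SELECTIONS:
       0. c3d0 <DEFAULT cyl 2044 alt 2 hd 128 sec 32>
          /pci@0,0/pci-ide@1,1/ide@0/cmdk@0,0
       1. c5t0d0 <ATA-VBOX HARDDISK-1.0-8.00GB>
          /pci@0,0/pci8086,2829@d/disk@0,0
       2. c5t1d0 <ATA-VBOX HARDDISK-1.0-8.00GB>
          /pci@0,0/pci8086,2829@d/disk@1,0
       3. c5t2d0 <ATA-VBOX HARDDISK-1.0-8.00GB>
          /pci@0,0/pci8086,2829@d/disk@2,0
       4. c5t3d0 <ATA-VBOX HARDDISK-1.0-8.00GB>
          /pci@0,0/pci8086,2829@d/disk@3,0
"""
print(zpool_create_command("tank", data_disks(listing, boot_disk="c3d0")))
# zpool create -f tank raidz c5t0d0 c5t1d0 c5t2d0 c5t3d0
```

The path lines below each entry never start with a selection number, so the regular expression skips them.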

To show the available pools, type:

# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

rpool 3,97G 2,90G 1,07G 73% ONLINE -

tank 31,8G 379K 31,7G 0% ONLINE -

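rpool is the pool on the boot device. You can see that connecting four 8 GB drives gives you almost 32 GB; zpool list shows this raw size, parity included. When you store something on that pool, it is stored redundantly and uses about a third (33 %) more space, so that the data survives the failure of one device: in a full stripe, a four-disk RAIDZ writes one parity block for every three data blocks.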

Now I created a filesystem on that pool:

# zfs create tank/home

It is mounted automatically at /tank/home. To list all ZFS filesystems, enter:

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 3,44G 482M 72K /rpool

rpool/ROOT 2,71G 482M 18K legacy

rpool/ROOT/opensolaris 2,71G 482M 2,56G /

rpool/export 746M 482M 21K /export

rpool/export/home 746M 482M 19K /export/home

rpool/export/home/mk 746M 482M 45,4M /export/home/mk

tank 682M 22,7G 26,9K /tank

tank/home 681M 22,7G 29,9K /tank/home

In this example I copied the OpenSolaris ISO image to my new filesystem. It occupies 681M there, but 911M on the pool:

# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

rpool 3,97G 3,44G 545M 86% ONLINE -

tank 31,8G 911M 30,9G 2% ONLINE -
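The 681M-versus-911M difference is roughly what single-parity RAIDZ arithmetic predicts. As a back-of-the-envelope sketch (it assumes equally sized disks and full-width stripes, and ignores metadata, padding of small blocks and compression), a stripe on n disks holds n - 1 data blocks plus one parity block, so every byte of data costs n / (n - 1) bytes on the pool:

```python
def raidz_overhead(disks: int, parity: int = 1) -> float:
    """Raw bytes written per byte of data for full-width RAIDZ stripes (idealised)."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return disks / (disks - parity)


def raidz_usable(disk_size: float, disks: int, parity: int = 1) -> float:
    """Usable capacity of equally sized disks after subtracting the parity share."""
    return disk_size * (disks - parity)


expected = raidz_overhead(4)  # four disks, single parity: 4/3
observed = 911 / 681          # pool usage vs. filesystem usage from the listings above
print(f"expected {expected:.2f}x, observed {observed:.2f}x")  # expected 1.33x, observed 1.34x
print(f"usable space with 4 x 8 GB: about {raidz_usable(8, 4):.0f} GB")  # about 24 GB
```

The usable figure lines up with the roughly 23 GB (USED plus AVAIL) that zfs list reports for tank.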

A very nice feature of ZFS is built-in compression. Properties of filesystems are inherited, so if you set compression on tank/home and create a new filesystem inside it, the new one is compressed automatically:

# zfs set compression=on tank/home

# zfs get compression tank/home

NAME PROPERTY VALUE SOURCE

tank/home compression on local

# zfs create tank/home/mk

# zfs get compression tank/home/mk

NAME PROPERTY VALUE SOURCE

tank/home/mk compression on inherited from tank/home
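The SOURCE column follows a simple rule. A property set on the dataset itself is `local`; otherwise the lookup walks up through the parent datasets and reports the first ancestor where the property was set, falling back to the default. The toy model below mirrors that lookup in plain Python. It is not the ZFS implementation, and the `"off"` default is only an assumption for this example.

```python
def get_property(local_props: dict[str, dict[str, str]], dataset: str,
                 prop: str, default: str = "off") -> tuple[str, str]:
    """Return (value, source) roughly the way `zfs get` reports it, for a toy dataset tree.

    local_props maps dataset names to the properties set directly on them.
    """
    name = dataset
    while True:
        if prop in local_props.get(name, {}):
            source = "local" if name == dataset else f"inherited from {name}"
            return local_props[name][prop], source
        if "/" not in name:              # reached the pool's root dataset
            return default, "default"
        name = name.rsplit("/", 1)[0]    # step up to the parent dataset


props = {"tank/home": {"compression": "on"}}
print(get_property(props, "tank/home", "compression"))     # ('on', 'local')
print(get_property(props, "tank/home/mk", "compression"))  # ('on', 'inherited from tank/home')
print(get_property(props, "tank", "compression"))          # ('off', 'default')
```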

Health insurance

ZFS ensures data validity with internal checksums, so it detects on the fly whether data is still valid and, if necessary, reconstructs it from the redundant copies.
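Checksums alone only detect damage. The redundancy is what repairs it. The toy model below illustrates that split. It is only an illustration: it assumes single XOR parity over equally sized blocks and SHA-256 checksums stored apart from the data, while ZFS's real on-disk layout and checksum choices differ. When a block's checksum no longer matches, the block is rebuilt from the other blocks plus parity and written back.

```python
import hashlib
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks (the single-parity calculation)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def checksum(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def write_stripe(data: list[bytes]) -> tuple[list[bytes], bytes, list[str]]:
    """Return the 'disks' (copies of the data blocks), one parity block and the checksums.

    The checksums live apart from the blocks they describe, similar to ZFS keeping
    a block's checksum in its parent block pointer.
    """
    return list(data), xor_blocks(data), [checksum(b) for b in data]


def read_and_heal(disks: list[bytes], parity: bytes, sums: list[str]) -> list[bytes]:
    """Verify every block; rebuild a single bad block from the others plus parity."""
    for i, block in enumerate(disks):
        if checksum(block) == sums[i]:
            continue
        others = [b for j, b in enumerate(disks) if j != i]
        repaired = xor_blocks(others + [parity])
        if checksum(repaired) != sums[i]:
            raise OSError(f"block {i}: more damage than single parity can repair")
        disks[i] = repaired  # write the good version back ("self-healing")
    return disks


disks, parity, sums = write_stripe([b"ZFS ", b"is  ", b"cool"])
disks[1] = b"i$  "  # simulate silent corruption on one disk
print(read_and_heal(disks, parity, sums))  # [b'ZFS ', b'is  ', b'cool']
```

Damage to two blocks of the same stripe cannot be repaired with one parity block. In that case the sketch raises an error instead of returning wrong data.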

To get the status of a pool, enter:

# zpool status -v tank

pool: tank

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1 ONLINE 0 0 0

c5t0d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

c5t3d0 ONLINE 0 0 0

errors: No known data errors

A scrub checks all data in the pool against its checksums. With consumer-quality drives it should be done weekly, and it can be triggered with:

# zpool scrub tank

and after some time, check the result with:

# zpool status -v tank

pool: tank

state: ONLINE

scrub: scrub completed after 0h2m with 0 errors on Tue Jan 13 11:36:38 2009

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1 ONLINE 0 0 0

c5t0d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

c5t3d0 ONLINE 0 0 0

errors: No known data errors
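Since the weekly scrub is meant to run unattended, it helps to check its outcome from a script. This minimal sketch assumes the `scrub:` line keeps exactly the format shown above (newer ZFS releases word it differently, under `scan:`). The function name is made up for this example.

```python
import re
from typing import Optional

SCRUB_DONE = re.compile(
    r"scrub: scrub completed after (?P<hours>\d+)h(?P<minutes>\d+)m "
    r"with (?P<errors>\d+) errors?"
)


def scrub_result(status_output: str) -> Optional[tuple[int, int]]:
    """Return (duration in minutes, error count) of a finished scrub, or None."""
    match = SCRUB_DONE.search(status_output)
    if match is None:
        return None  # e.g. "scrub: none requested" or a scrub still in progress
    minutes = int(match["hours"]) * 60 + int(match["minutes"])
    return minutes, int(match["errors"])


status = "scrub: scrub completed after 0h2m with 0 errors on Tue Jan 13 11:36:38 2009"
print(scrub_result(status))                   # (2, 0)
print(scrub_result("scrub: none requested"))  # None
```

A cron job could feed it the output of `zpool status -v tank` and send a mail whenever the error count is not zero.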

Gnome Time Machine

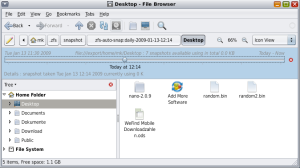

When I logged into a Gnome session and browsed through the menus, I noticed the program “Time Slider Setup”.

When you activate it, it creates ZFS snapshots on a regular basis. These snapshots don't waste disk space (a snapshot only consumes space for data that is changed or deleted afterwards), and you can travel back in time with the Gnome file browser Nautilus (not as fancy as with Time Machine, but who cares).

That is a killer feature, and if I were still a Linux/Java developer, this would be a good reason for me to switch to OpenSolaris. You don't need a RAID to take snapshots with ZFS, so your filesystem has a built-in, time-based versioning system. *cool*
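A fresh snapshot costs almost nothing because of copy-on-write. Taking a snapshot only freezes the current block pointers. New space is consumed only when a block is overwritten later, because the old version has to be kept for the snapshot. The toy model below shows this behaviour. It is a simplification in which a flat dict of block ids stands in for ZFS's block tree, and all class and method names are made up.

```python
from typing import Optional


class CowFilesystem:
    """Toy copy-on-write store: files point at immutable blocks; snapshots copy pointers only."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}   # physical block id -> contents
        self.live: dict[str, int] = {}       # file name -> block id
        self.snapshots: dict[str, dict[str, int]] = {}
        self._next_id = 0

    def write(self, name: str, data: bytes) -> None:
        # Never overwrite in place: allocate a new block and repoint the file.
        self.blocks[self._next_id] = data
        self.live[name] = self._next_id
        self._next_id += 1
        self._free_unreferenced()

    def snapshot(self, snap: str) -> None:
        self.snapshots[snap] = dict(self.live)  # copies pointers, not data

    def read(self, name: str, snap: Optional[str] = None) -> bytes:
        table = self.live if snap is None else self.snapshots[snap]
        return self.blocks[table[name]]

    def used_blocks(self) -> int:
        return len(self.blocks)

    def _free_unreferenced(self) -> None:
        # A block is freed only when neither the live tree nor any snapshot points at it.
        referenced = set(self.live.values())
        for table in self.snapshots.values():
            referenced.update(table.values())
        self.blocks = {i: b for i, b in self.blocks.items() if i in referenced}


fs = CowFilesystem()
fs.write("notes.txt", b"v1")
fs.snapshot("monday")
print(fs.used_blocks())  # 1: the snapshot shares the block
fs.write("notes.txt", b"v2")
print(fs.used_blocks())  # 2: the old block is kept for the snapshot
print(fs.read("notes.txt", "monday"), fs.read("notes.txt"))  # b'v1' b'v2'
```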

Network accessibility

My next step was to set up Netatalk on Solaris using this guide. At first I had some difficulties and had to install gmake and gcc. The described patches didn't work correctly, since the file /usr/ucbinclude/sys/file.h was missing, so I changed the #if statement from

#if defined( sun ) && defined( __svr4__ )

#include </usr/ucbinclude/sys/file.h>

#else /* sun __svr4__ */

to

#if defined( sun ) && !defined( __svr4__ )

#include </usr/ucbinclude/sys/file.h>

#else /* sun __svr4__ */

in all source files where it occurred:

- etc/atalkd/main.c

- etc/cnid_dbd/dbif.c

- etc/papd/main.c

- etc/papd/lp.c

- sys/solaris/tpi.c

I need to work on this further to get it running with Time Machine.

Getting real

So everything looks promising at first glance. The next logical step would be to buy the hardware components and try everything out. I configured bare systems in mid-tower cases with AMD or Intel processors for approximately 170,- to 190,- €. That's less than half the price of a Drobo. For each SATA hard drive I would buy a hot-swap enclosure. Another option would be to use external USB drives, but that might lead to quite a cluttered and fragile construction.

I need to investigate further to choose the right components. Motherboards tested with OpenSolaris are listed here: http://www.sun.com/bigadmin/hcl/

ZFS has a hotplug feature, so when the pool's autoreplace property is turned on, a failed device can be replaced without rebooting and without typing any commands. If that doesn't work, the replacement can also be started from the command line with zpool replace.

Next steps

I really need to invest more time in the VM: try to corrupt some disk images and see how ZFS reacts. Expanding existing pools with drives of different sizes will also be interesting.

Of course I need to get Netatalk working and try to use it as a Time Machine target. I could run the VM on a different host to simulate the final system.

Stay tuned for my future investigations, and please don't start trolling about the superiority of the Drobo. I think it is a matter of taste.

Just FYI: Netatalk is available for Drobo and DroboShare, which can make it a Time Machine network target. See: http://code.google.com/p/backmyfruitup/ and http://www.drobo.com/droboapps

Also, don’t forget to factor in the value of your time in trying to create your Drobo replacement 🙂 Seriously! 🙂

Wow, even the Drobo people are reading my unworthy thoughts.

I am aware of DroboShare and AFP support, but that adds more to the high price tag.

The amount of time I have to put into this project will not be small, but I see it as an opportunity to learn something new.

You also have to factor in the SPEED of the device. The Drobo will cost twice as much money for less than half the throughput, especially with the joke of a NAS solution aka DroboShare. For $199 you get about 2-5 MB/s write and 5-20 MB/s read (it depends on file size: burst speed will be fast, but continuous reading/writing will eventually slow it down to a crawl).

Very nice.

I recently did the same, but on a real machine. I wasn't that comfortable with the terminal, but I got used to it and it works very well. I use SMB and NFS (which are both built in) to share the files with my Macs. SMB seems to have a problem with umlauts, but maybe not in general. I'm investigating that 😛

Otherwise it works great. All the little stuff can be configured remotely via SSH, and if you want the GUI, you can use X11 on your Mac as the X server for OpenSolaris. Very nice, and way faster than VNC (which also works out of the box).

The flexibility of ZFS is also great. I use three 1 TB drives in a RAIDZ and two 500 GB drives in a mirror, put together in one zpool. Further drives can easily be added (but only as whole vdevs, e.g. another mirror or RAIDZ set, not as a single drive added to an existing RAIDZ), or the drives can be replaced with bigger ones.
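The capacity of such a pool is simply the sum of its top-level vdevs, and each vdev is limited by its smallest member. The sketch below applies that arithmetic to the RAIDZ-plus-mirror layout just described. It ignores metadata overhead and the decimal-versus-binary difference in drive sizes, and the function names are made up.

```python
def vdev_usable(sizes_tb: list[float], kind: str) -> float:
    """Usable capacity of one top-level vdev; every member counts as the smallest one."""
    smallest = min(sizes_tb)
    if kind == "mirror":
        return smallest  # all members hold the same data
    parity = {"raidz1": 1, "raidz2": 2, "raidz3": 3}[kind]
    if len(sizes_tb) <= parity:
        raise ValueError(f"{kind} needs more than {parity} disks")
    return smallest * (len(sizes_tb) - parity)


def pool_usable(vdevs: list[tuple[str, list[float]]]) -> float:
    """ZFS spreads data across top-level vdevs, so their capacities add up."""
    return sum(vdev_usable(sizes, kind) for kind, sizes in vdevs)


pool = [("raidz1", [1.0, 1.0, 1.0]), ("mirror", [0.5, 0.5])]
print(pool_usable(pool))  # 2.5 (TB)
```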

Couple of comments:

– OS2008.11 includes native CIFS support, so it may be easiest to have ZFS share your mounts via CIFS. The Mac is happy to work with them, there is little if any performance penalty (if you can bear CIFS on the network!), and any Windows clients will be covered as well

– ZFS snapshots can be made visible in the filesystem through a .zfs directory, hence your clients can see snapshots and recover files manually from the snaps
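Each filesystem exposes its snapshots under `<mountpoint>/.zfs/snapshot/<snapshot name>/`, with the same layout as the live filesystem. The directory is hidden by default but can still be entered, and `zfs set snapdir=visible` makes it show up in listings. The helper below maps a live file to its copy in a given snapshot. It is a small sketch that assumes the mountpoint is known, and the snapshot name in the example is made up.

```python
from pathlib import PurePosixPath


def snapshot_path(mountpoint: str, live_file: str, snapshot: str) -> PurePosixPath:
    """Path of `live_file` as it existed in `snapshot` of the filesystem at `mountpoint`."""
    mount = PurePosixPath(mountpoint)
    relative = PurePosixPath(live_file).relative_to(mount)  # ValueError if outside this filesystem
    return mount / ".zfs" / "snapshot" / snapshot / relative


print(snapshot_path("/tank/home", "/tank/home/mk/notes.txt", "daily-2009-01-12"))
# /tank/home/.zfs/snapshot/daily-2009-01-12/mk/notes.txt
```

Restoring a file then amounts to an ordinary copy from that path back into the live filesystem.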

– Time Machine will be quite happy to run against a sparsebundle file created in the filesystem (basically a thin-provisioned disk image). Once it knows where it is, TM will take care of remounting the FS to gain access, backing up and unmounting automatically… search Google for discussions around TimeTamer and Drobo, and also search out TimeMachineEditor, which tweaks the plist preference for the backup interval

– ZFS performance depends heavily on ARC utilisation (the in-memory cache), so factor more memory into any purchase as a first attempt at improving performance.

– OS2008.11 has a storage-nas package profile, which minimises an install while enabling all the bits you'd like for a NAS (see http://www.opensolaris.com/learn/features/whats-new/200811/, section 3.1.2)

HTH, my Drobo was a short-term solution pending a live ZFS solution… so this might be a path well travelled!

Craig

What attracts me most to the Drobo is the possibility to put in a drive of any size, and the whole RAID will adapt to it. How can something similar be simulated with ZFS? Pooling together different pools? RAIDing pools? I've never touched ZFS so far; there's GOT to be a wizard out there who could come up with an idea? How do you people get around the fact that RAIDZ cannot be grown? How do you combine your RAIDs and pools so you can add disks to your NAS? Thanks for your brilliant insight!

hmmm – my attempted post was “discarded”, so I'll try again with a quick post.

ok, that worked so here’s a quick recap.

I’ve had a drobo and have successfully upgraded to 4*1TB, however I’m less excited about their now-closed forums & support.

I've also had ZFS on Solaris, OpenSolaris and Nexenta, but I'm now struggling with the disappearing CIFS/SMB share issue.

Solaris smbd issues a message “NbtDatagramDecoder[11]: too small packet” (google for more) about every twelve minutes and eventually the share no longer works and “sharemgr show -vp” hangs.

so, a head’s up and insight appreciated,

joseph

Just a naive question: in your setup what if your hard disk named c3d0 in pool ‘rpool’ were to go down? Will you be able to use data from the pool named ‘tank’?

Cheers

Of course the rpool also has to be mirrored, at least to keep the system up and running. But you could also attach the drives to another system and import the zpool there (zpool import).

the second option definitely looks worth investigating on my part. Thanks!


I've lusted after the Drobo since learning about it last year, but I've been disappointed with the lack of performance (we bought one for the office to mess around with; copying to/from it while someone else is accessing a database file leaves much to be desired).

As a result, I’ve been in the market to build a NAS. This opens up an avenue of opportunity as long as I can get my raid cards to work with opensolaris!

Now, if I could just grow my array the way the drobo does, I’d be a very happy camper!

Actually, if you are using ZFS, you should avoid hardware RAID cards. The hardware RAID interferes with ZFS, so ZFS cannot do all its fancy error checking and error repair. The recommendation is NOT to use hardware RAID. Sell the card.


So, how is the machine working?

Got all the pluses of the drobo and none of the con’s?

Hi, great article. A little update showing how the whole thing is performing now would be nice! Thanks for the post!

Omer > Well, Drobo gives you support, a good compact design and “just plug it in and it works”, which you won't find here; most retail products have the same “pros” that DIY doesn't have.

Good idea, I’m considering building a server/nas of my own, already got all the hardware … now playing with ESXi 🙂

I too am on a similar adventure of using an advanced file system (i.e. ZFS, btrfs, etc.) to make my own hyper-Drobo with a less-than-hyper price tag. As a suggestion, have you looked at Nexenta or FreeNAS as a standalone all-in-one solution? I understand they both support ZFS and will support btrfs when it is finalized in the Linux kernel. Hope this discussion thread is still alive.

Hi Lazarus,

actually those stand-alone solutions did not offer enough space for 6 hard drives, or they did not seem to have the right drivers to run OpenSolaris. At the moment I am quite happy with my choice.

Cheers,

Markus

Hi,

I’m also building a new NAS at the moment, using my hardware which I bought two years ago and used with Debian. Now I replaced the four hard drives with six bigger disks and switched to OpenSolaris.

I also have a Drobo. There are some more pros and cons:

+ power consumption of the Drobo should be lower than my pc hardware

+ management and status (!) software

+ DroboPro may be used with 8 hard disks and single or double redundancy

– just a storage box

– no power switch

– no information about S.M.A.R.T.

Also, I'm looking for a USB LCD device as a status display. Do you know if there is a small Windows app for retrieving status information?

Best regards and thank you for the nice article,

Nils.

Hi

Nice article, thanks. Just out of interest: I had a ZFS OpenSolaris box for all my services (1.5 TB video, 0.5 TB music, 1 TB data) for almost 2 years. I moved away from it towards Drobo a year ago. My main reason: ZFS can't be upgraded. Now hear me out here. You are using RAIDZ. I too used RAIDZ, even the double-parity version. But I cannot upgrade it now, and all disks must be the same size.

You can upgrade the ZFS file system by adding another set of three or more drives, but I can't add just one drive. You mention here not being able to use the spare space. Yes, in ZFS you can make partitions on a new big disk small enough to work with the old disks, and then use the remaining space for something else. In reality, though, this becomes very difficult to manage.

Let me start with the best thing: ZFS had snapshots. This is the one feature I am missing with Drobo. I loved the security of having snapshots.

Now let me talk about your main points:

* Drobo does know hosted file systems. This is one of the reasons it has limited file system support (e.g. Ext3, NTFS, HFS+). If it did not know them, it would not be able to provide a ‘larger than really available’ solution. So resilvering a replaced disk only copies the data it needs.

* Upper file system size limit: this is a limit of the file system, not the Drobo. I.e. NTFS, HFS+ and Ext3 do not understand resizing (well, not really; there are ways).

* You cannot use the spare space with disks of different sizes: there are scenarios where not all disk space can be used, e.g. if you add a 1 TB and 3 × 500 GB disks. But the same goes for a ZFS pool. You could in theory use the 3 × 500 GB disks in a RAIDZ and then the 1 TB disk as a single drive, but then if the 1 TB single drive fails, you lose everything on that ZFS volume.

* 4 drive limit sux. This was my main reason to start with ZFS: I wanted to add 7 × 320 GB disks. Drobo has more recently added 5-disk and 8-disk models, but they are very expensive.

* Network accessible: you are talking here about DroboShare. ZFS is a file system. Drobo is an external hard disk. Network access effectively requires a computer. You can of course run a computer with all the bits, including internal hard disks, ZFS and various file systems. But Drobo is just like an old SCSI RAID controller: it is not the operating system, nor network access, so it is not really comparable. That said, I would like to see Drobo become more intelligent. The downside of Drobo is that it does NOT have its own file system, so it has all the faults of external drives (both human and computer software errors).

* Drobo is too expensive – agreed !

This information is just to put things in context. It seems you are comparing Drobo with a NAS; even Drobo+DroboShare is only a simple NAS. What Drobo is good at, and what it does well, is zero management of disk space. To add a new drive, you pull one out and put a new one in, and you now have a bigger disk system (like you said, as long as you made it big enough in the first place). Drives do not have to be the same size; with ZFS RAIDZ they do.

What Drobo does badly though, even with Drobo Share, is file system. ZFS is more secure / reliable – because of snapshots and

In the end, after living with ZFS for more than a year, it was just too much maintenance, and almost impossible to add more space to (7 disks take up all the slots on the board, and I would need to add more to move data across before I can unmount the old ones). Having lived with Drobo for more than a year, I can say that I have had no issues, and I have added new disk space 3 times, each time buying the best GB/$ drive and replacing the smallest disk.

BUT… I expect to have a problem one day. Drobo does not protect me from accidental deletion (ZFS snapshots) or from corruption (e.g. HFS+ external drives losing their directory structures). So ZFS is still better, if you have the time to manage it and don't mind upgrading the drives in one big go instead of one drive at a time.

Good article. Thanks.

Scott,

thanks a lot for this long comment. You are right: I should have named it “Build your own Drobo-Share…”. The main feature I am very glad to have is a central Ruby backup script on the server, which collects all the data from my several computers via rsync, so I just have to make sure the server can connect to them via SSH.
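The actual Ruby script isn't shown here, so the following is only a hypothetical Python sketch of the same pull idea. The server runs rsync over SSH against each client and then takes a ZFS snapshot, so every run is kept as its own point in time. All host names, paths and the dataset name are made up. The functions only build the commands; each one could be passed to `subprocess.run(cmd, check=True)` to execute it.

```python
from datetime import date

# Hypothetical clients: host -> directory to back up on that host.
CLIENTS = {"macbook.local": "/Users/mk/", "imac.local": "/Users/mk/"}
DATASET = "tank/backup"  # hypothetical ZFS filesystem, mounted at its default /tank/backup


def rsync_command(host: str, source: str, dataset_mount: str) -> list[str]:
    """Pull `source` from `host` over SSH into a per-host directory on the server."""
    return ["rsync", "-az", "--delete", "-e", "ssh",
            f"{host}:{source}", f"{dataset_mount}/{host}/"]


def backup_plan(clients: dict[str, str], dataset: str, day: date) -> list[list[str]]:
    """All rsync runs, followed by one snapshot that freezes this run's state."""
    mount = "/" + dataset  # default mountpoint of a ZFS filesystem
    commands = [rsync_command(host, src, mount) for host, src in clients.items()]
    commands.append(["zfs", "snapshot", f"{dataset}@{day.isoformat()}"])
    return commands


for cmd in backup_plan(CLIENTS, DATASET, date(2009, 1, 13)):
    print(" ".join(cmd))
```

Because `--delete` mirrors deletions on the clients, the snapshots are what keep older versions around.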

Let's wait and see how much maintenance my solution requires…

Cheers,

Markus

Must say that I am however a little jealous 🙂 Having a full power of unix & ZFS (with snapshots and CRC error recovery etc) is something I do miss. Thanks. Scott

Just to clarify a point in Scott's comments… in ZFS, the space in a pool can (sensibly!) be upgraded in two ways: you can provide ZFS with an additional vdev, which should have the same characteristics as those already present in the pool, or you can swap out/replace the existing devices with larger devices and hence realise extra space.

vdev is ZFS' term for the top-level device (RAID construct) that you've organised your pool of disks into. Hence a four-disk RAID-Z has one effective vdev, the virtual device that ZFS writes to. If you provision multiple vdevs in a pool, ZFS will spread data across all of them. So, one way to grow a pool already containing a 4-disk RAID-Z would be to add four more disks organised as a new vdev (i.e. you now have two sets of 4-disk RAID-Z in total). Note that we are basically persisting the performance/reliability/recovery attributes of the pool here… but you'll get a perf/throughput increase on IO to the pool, because ZFS can now use the two top-level vdevs as a stripe. Just throwing 8 disks directly into a pool is the extreme (but not redundant!) case of this: with no other organisation, each disk becomes a top-level vdev, and hence we've striped across every disk.

So, downside of the above for home users is typically lack of expandability in their physical solution, if you had a JBOD with 4 slots initially filled with drives, you’d need 4 more slots to “expand”.

The second case, growing the capacity through substitution, is probably much more likely for home use… here our initial (single vdev) 4-disk RAID-Z would get each disk swapped out one by one for a larger capacity drive, i.e. a 1 TB drive swapped for a 2 TB replacement. ZFS will initially just resilver each drive on substitution, note with no increase in available space in the pool, *until* you swap the last drive, at which point the latent storage (an extra 4 TB raw, about 3 TB usable after parity) will “magically” get added to the pool free space.

So, we can expand in place à la the Drobo, but in any given vdev we short-stroke to a consistent smallest size of device; once all devices show 2 TB capacity in the RAID-Z, ZFS will automatically grow the pool, and hence any filesystems within will have expanded capabilities. I agree, it's not as flexible as the Drobo case, which maximises space after every disk swap, so one disk can yield an immediate improvement (whereas ZFS requires us to postpone that increase until all devices in a vdev are replaced). But if you've done this on a real Drobo, then you also know that there is a penalty in the reconstruct stage for each drive as it re-lays out the data to take advantage of the extra space… without the integrity guarantees of ZFS, that's a set of data migrations during which your data could be put at risk… multiple times.
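The step-by-step effect is easy to see by applying the smallest-member rule after each swap. The sketch below does this for the 4-disk RAID-Z example, with sizes in TB. It assumes the pool picks up the new size as soon as the last small disk is gone, which is the behaviour described here. On later ZFS versions that also requires the pool's autoexpand property to be on. Resilver time and metadata are ignored.

```python
def raidz1_usable(sizes_tb: list[float]) -> float:
    """Single-parity RAID-Z: every member counts as the smallest one."""
    return min(sizes_tb) * (len(sizes_tb) - 1)


def upgrade_one_by_one(old: list[float], new_size: float) -> list[float]:
    """Usable capacity before the upgrade and after each disk is replaced by a larger one."""
    disks = list(old)
    history = [raidz1_usable(disks)]
    for i in range(len(disks)):
        disks[i] = new_size  # zpool replace + resilver of one member
        history.append(raidz1_usable(disks))
    return history


print(upgrade_one_by_one([1.0] * 4, 2.0))
# [3.0, 3.0, 3.0, 3.0, 6.0]
```

The usable space jumps from 3 TB to 6 TB only after the fourth swap. At the same moment, the raw size shown by zpool list grows from 4 TB to 8 TB.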

Overall, that means today ZFS requires either a set of new drives over and above existing vdev -or- a swap out of the complete existing vdev set before you’ll realise your gains. In its favour apart from the obvious of an open and accessible data format on disk (Drobo recovery is non-trivial/existent outside their labs), you shouldn’t overlook built-in compression, snapshotting/cloning, intelligent re-silver and checksumming for integrity, zfs send/receive (Amazon S3 is a nice target!), RAID-Z3 for large data sets and now the first widely available de-duplication mechanism …

Hope that helps clarify some of the statements …

Great thread, nice responses with honest real experience not just “I plugged it in and now I’m an expert” type comments. I’ll be going Drobo.

I’m building out a second server to use for offsite duplication of my data. In both cases, I am using hot swap bays from StarTech, such as:

http://www.startech.com/item/HSB320SATBK-3-Drive-Tray-Less-SATA-Hot-Swap-Enclosure.aspx

to put my drives into. There is a 4 drive bay as well, these have fans and are easily installed and seem robust.

The use of snapshots and “zfs send” and “zfs receive” is what I am most interested in. Having to pay for 4-6 TB of storage and upload it to S3 seems pretty pricey. So far, it has been nice to have everything in one place. I will also be using VirtualBox to run an instance of Windows Home Server to allow the few remaining PCs in the house to do backups.
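For an offsite copy, the usual pattern is to send the first snapshot in full. After that, only the difference is sent (`zfs send -i`), from the newest snapshot both sides have to the newest local one. The sketch below captures that decision. The dataset, host and snapshot names are hypothetical, it assumes snapshot names are identical on both sides (which `zfs receive` preserves), and it only builds the shell pipeline as a string.

```python
from typing import Optional


def latest_common(local: list[str], remote: list[str]) -> Optional[str]:
    """Newest snapshot present on both sides (both lists ordered oldest -> newest)."""
    on_remote = set(remote)
    for snap in reversed(local):
        if snap in on_remote:
            return snap
    return None


def replication_command(dataset: str, local: list[str], remote: list[str],
                        host: str, target: str) -> Optional[str]:
    """Shell pipeline that brings `target` on `host` up to the newest local snapshot."""
    if not local:
        raise ValueError("take a snapshot first (zfs snapshot)")
    newest = local[-1]
    base = latest_common(local, remote)
    if base == newest:
        return None  # nothing new to send
    if base is None:
        send = f"zfs send {dataset}@{newest}"  # first run: full stream
    else:
        send = f"zfs send -i {dataset}@{base} {dataset}@{newest}"  # changes only
    return f"{send} | ssh {host} zfs receive -F {target}"


snaps = ["2009-01-11", "2009-01-12", "2009-01-13"]
print(replication_command("tank/home", snaps, snaps[:2], "offsite.example", "backup/home"))
# zfs send -i tank/home@2009-01-12 tank/home@2009-01-13 | ssh offsite.example zfs receive -F backup/home
```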

This will allow me to power off my HP Home Server box, my drobo and my time machine. One point of failure in the house now, but the remote copy is what I will be relying on for robust data integrity.

And also, for a different way to add a 4 drive raidz set, this device would make it pretty easy to do this one slot at a time. The next biggest problem would then be power and sata controllers.

http://www.startech.com/item/SATABAY425BK-4-Drive-25in-Removable-Mobile-Rack-SAS-SATA-Backplane.aspx

OR, you could grab a free version of NexentaStor which is a fully packed ZFS based storage solution.

VMware, Xen and baremetal versions available here: http://www.nexentastor.org/projects/site/wiki/DeveloperEdition

This version is currently limited to 4TB however it will be increased to 12TB shortly.

I really might have to go Drobo after reading this

It seems that there is a simple workaround for the ZFS expandability issue:

http://wiki.mattrude.com/index.php?title=Freenas/ZFS_and_FreeNAS_expansion

This is the basic idea… I will be creating five raid5 sets inside one ZFS pool. I will be creating four partitions on each of the four drives and using these partitions as the storage containers for the raid arrays. This way I can control the sizes of each partition and get the best use out of the drive space within a raid5 array. ZFS allows you to expand the size of a raid array, and the method I'm describing lets you take advantage of that cool feature.

What I will be doing is slicing up the four hard drives into as many equal sized parts as I can. From there I’ll build five Raid5 arrays (one for each line of partitions in the phase 1 illustration) and create a zpool called tank0 containing all five raid5 arrays.

I’ve got a drobo S and once I get a replacement it will need a new home.

The drive has been used with a mac, used for Time Machine and I keep getting

IOFireWireSBP2ORB::prepareFastStartPacket – fast start packet not full, yet pte doesn’t fit

errors on the console log.

Drobo said all was fine.

Running Disk Utility said all was fine.

Restoring from Time Machine failed consistently.

Connecting with USB is currently working. But I don’t trust hardware that fails silently.

As to the expandability issue: Conceptually it’s easy. I think FreeNas understands GUID partition tables, which means you can have up to 128 partitions per disk.

So construct partitions of 100 GB each on every disk. For a 3 TB disk that would be 30 partitions. Now, for mirroring you can construct vdevs using one partition each from multiple disks. So if you had a 1 TB, a 1.5 TB and a 2 TB drive, you would start off by matching 7 of the 2 TB drive's partitions with the 1 TB drive, and 12 with the 1.5 TB drive. And the 1.5 and the 1.0 would share 3. The net result is that you are able to use 4.4 out of the 4.5 TB available.

Now replace the 1 TB with a 2 TB. The splits then become 8, 12 and 7 (the exact solution is 7.5, 12.5 and 7.5), and you can use 5.4/5.5 TB.

This is done with a simple set of 3 equations in 3 unknowns. Call the splits a, b, c. Then in the first problem a+b = 10 (1 TB = 10 partitions of 100 GB), a+c = 15 and b+c = 20. You then round the answers, but you have to round down more than up. (They are actually inequalities, i.e. boundary conditions.)
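The three equations can be solved in closed form. Adding them gives a + b + c = (n1 + n2 + n3) / 2, and each unknown equals that sum minus the one equation that does not contain it. The sketch below computes this with exact fractions and rounds everything down. It then adds back a single pair wherever both drives still have a free partition, which is one possible rounding rule and not necessarily the commenter's. The function name is made up, and the sketch simply gives up when one drive is larger than the other two combined.

```python
from fractions import Fraction
from itertools import combinations


def mirror_splits(n1: int, n2: int, n3: int) -> dict[tuple[int, int], int]:
    """Mirrored partition pairs between each two of three drives.

    n1..n3 are partition counts per drive. Solves a+b=n1, a+c=n2, b+c=n3 with
    a = drives 0&1, b = drives 0&2, c = drives 1&2, rounds down, then adds
    single pairs back wherever both drives still have a free partition.
    """
    capacity = {0: n1, 1: n2, 2: n3}
    total = Fraction(n1 + n2 + n3, 2)  # a + b + c
    exact = {(0, 1): total - n3, (0, 2): total - n2, (1, 2): total - n1}
    if any(v < 0 for v in exact.values()):
        raise ValueError("one drive is larger than the other two combined")
    pairs = {p: int(v) for p, v in exact.items()}  # int() floors non-negative values

    def used(drive: int) -> int:
        return sum(v for p, v in pairs.items() if drive in p)

    for p in combinations(range(3), 2):
        if all(used(d) < capacity[d] for d in p):
            pairs[p] += 1
    return pairs


splits = mirror_splits(10, 15, 20)  # 1 TB, 1.5 TB, 2 TB in 100 GB partitions
print(splits)  # {(0, 1): 3, (0, 2): 7, (1, 2): 12}
print(f"{2 * sum(splits.values()) / 10} TB of 4.5 TB in use")  # 4.4 TB of 4.5 TB in use
```

Because every pair is a two-way mirror, the usable space is half of the partitions in use, i.e. 2.2 TB in this example.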

Now add a new 3 TB drive to the mix. You now have 4 drives. 1.5, 2, 2, 3

The first 1.5 TB of each drive is evenly used, leaving you with 0.5, 0.5 and 1.5 TB unused.

Mathematically, you then have 3 drives left. This one is simple: the two small parts can be mirrored against 1 TB of the large part.

Striping these vdevs would NOT be a good idea, as it would make for a lot of disk seeks. Concatenating them would make more sense.

Breaking a large drive into many small pieces and piecing them together into a larger pool is something I've also considered as a way to make ZFS the Drobo replacement. At issue is just deciding on the math, as you've illustrated, Sherwood. It seems doable, and you just need to decide on small enough “pieces” that you could quickly shuffle space use around to handle a drive loss. But you still can't delete vdevs in ZFS, nor make them smaller, so the loss of a drive on a ZFS system still requires replacement media in the form of hot spares for RAIDZ, or mates for mirroring.

So you can't effectively do what Drobo does: shrink the available space when a drive is lost while still protecting against the loss of another drive, provided there is enough space left for that after a drive dies.

Great post and great comments! Got here by accident but I am very happy I did. Currently I am a Drobo user, and a satisfied one, but good to know that ZFS exists, even though it doesn’t really fit here right now (my “NAS layer” keeps changing but not the “DAS layer”, so Drobo has been a great fit).

But this snapshot feature really got me wondering what if…

Bleeding Edge is called that because sometimes it makes you BLEED.
